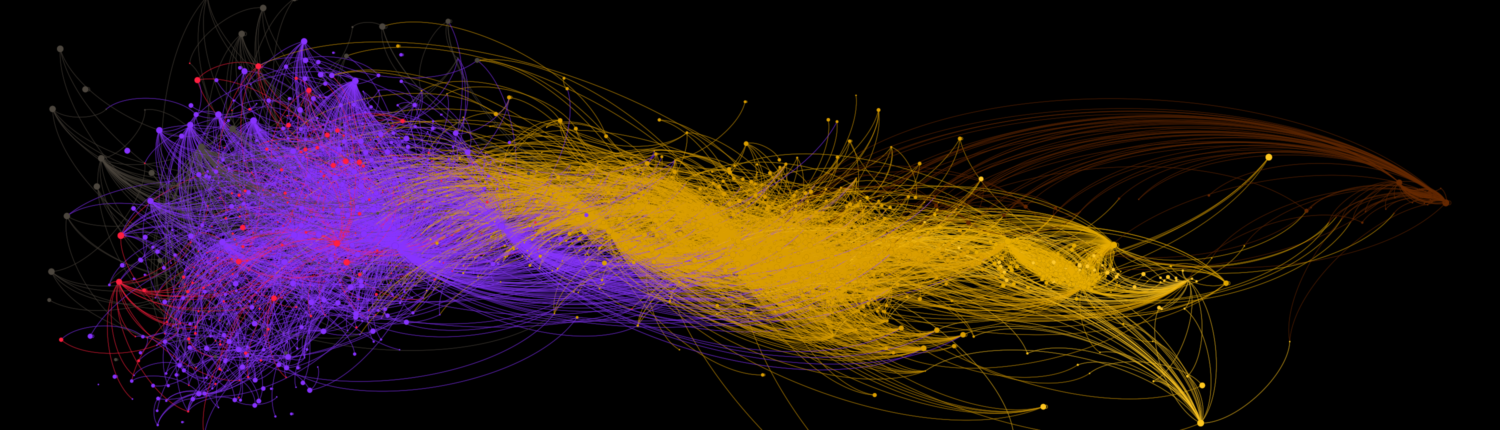

Sociopics are generated from social interactions harvested on social media (most of the sociopics here are made from Twitter data). These videos focus on a particular moment of our collective lives by showing the sonified interactions of social media accounts that discuss a particular topic or belong to specific social groups.

In these videos, each social media account is represented by a colored disc, and interactions between accounts (i.e. retweets) are represented by dynamic links.
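As a rough illustration of what a "dynamic link" can mean in data terms, the sketch below keeps only the interactions that fall inside a sliding time window. The `Retweet` record, its field names, the sample accounts and the window length are all assumptions made for this example, not the format actually used to build sociopics.

```python
from collections import namedtuple

# Hypothetical record for one interaction; the field names are assumptions.
Retweet = namedtuple("Retweet", ["time", "source", "target"])


def active_links(events, now, window):
    """Return the (source, target) links seen in the interval (now - window, now]."""
    return {(e.source, e.target) for e in events if now - window < e.time <= now}


# Made-up sample data: times are in arbitrary units.
events = [
    Retweet(0, "alice", "bob"),
    Retweet(5, "carol", "bob"),
    Retweet(12, "alice", "carol"),
]

# Links that would be drawn at time 12 with a window of 10 time units.
links_now = active_links(events, now=12, window=10)
```

Sliding `now` forward frame by frame makes links appear and then fade, which is one simple way to obtain a time-varying graph.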

A global network analysis is performed to identify social groups, and accounts belonging to the same group are given the same color. The temporal activity of each social group is turned into sound by assigning a musical note and a note length to each activity intensity, and an instrument or sound texture to each social group. Sound and image are then synchronized so that social dynamics can be both seen and heard.
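The mapping from activity to sound described above could be sketched as follows. This is a minimal illustration under stated assumptions, not the actual sociopics pipeline: the pentatonic scale, the linear scaling of intensity to pitch and duration, the group names and the activity counts are all hypothetical. Instruments are given as General MIDI program numbers (0-indexed).

```python
# Pentatonic scale as MIDI note numbers (C4, D4, E4, G4, A4); an arbitrary choice.
SCALE = [60, 62, 64, 67, 69]


def activity_to_notes(counts, max_beats=2.0):
    """Map per-time-bin activity counts to (midi_note, beats) pairs; None marks a rest."""
    peak = max(counts) if counts and max(counts) > 0 else 1
    notes = []
    for count in counts:
        if count == 0:
            notes.append(None)  # no activity in this bin: silence
            continue
        level = count / peak  # normalised intensity in (0, 1]
        index = min(int(level * len(SCALE)), len(SCALE) - 1)
        # Higher intensity gives a higher and longer note.
        notes.append((SCALE[index], round(level * max_beats, 2)))
    return notes


# Hypothetical groups, instruments (0 = acoustic grand piano, 40 = violin)
# and activity counts per time bin.
INSTRUMENTS = {"group_a": 0, "group_b": 40}
ACTIVITY = {"group_a": [0, 2, 5, 10], "group_b": [4, 4, 1, 0]}

tracks = {
    group: {"program": INSTRUMENTS[group], "notes": activity_to_notes(counts)}
    for group, counts in ACTIVITY.items()
}
```

Each entry of `tracks` could then be written to one MIDI track with a MIDI library of choice and orchestrated in a DAW.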

Tools used to generate sociopics: programming languages (generation of the temporal graphs and conversion of community activity into MIDI), a DAW (orchestration, generation of the soundtracks and mastering), Gephi (dynamic graph visualisation software), video capture software (to convert Gephi visualizations into video), and a video editing tool (synchronization of sound and video; video annotation).